Estimating IT Acquisitions:

Large information technology acquisitions are expensive, lengthy projects in their own right, so it makes sense to estimate them using formal techniques. We've reviewed multiple potential size drivers for these estimates, and the only one with strong predictive value in a cost estimating relationship (CER) is the value of the acquisition (we use size in $millions). The adjusting variables are acquisition type (new, replace, upgrade); diversity of stakeholders; funding source (state, federal, mixed, benefit); and procurement approach (single-step or multi-step).
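
To make the shape of such a CER concrete, here is a minimal sketch, assuming a common power-law form (effort = a × size^b) adjusted by one multiplier per categorical variable. The coefficients `a` and `b`, every multiplier value, the "effort hours" output, and the function name `acquisition_effort_hours` are hypothetical placeholders for illustration, not Level 4's calibrated model.

```python
# Hypothetical CER sketch: every coefficient and multiplier below is a placeholder,
# not a calibrated value. Size driver = acquisition value in $millions.
ACQUISITION_TYPE = {"new": 1.2, "replace": 1.0, "upgrade": 0.8}
STAKEHOLDER_DIVERSITY = {"low": 0.9, "medium": 1.0, "high": 1.25}
FUNDING_SOURCE = {"state": 1.0, "federal": 1.1, "mixed": 1.2, "benefit": 1.05}
PROCUREMENT = {"single-step": 1.0, "multi-step": 1.3}


def acquisition_effort_hours(
    size_musd: float,
    acquisition_type: str,
    stakeholder_diversity: str,
    funding_source: str,
    procurement: str,
    a: float = 400.0,  # hypothetical scale coefficient
    b: float = 0.7,    # hypothetical size exponent
) -> float:
    """Effort = a * size^b, scaled by one multiplier per adjusting variable."""
    if size_musd <= 0:
        raise ValueError("acquisition value must be positive")
    base = a * size_musd ** b
    return (
        base
        * ACQUISITION_TYPE[acquisition_type]
        * STAKEHOLDER_DIVERSITY[stakeholder_diversity]
        * FUNDING_SOURCE[funding_source]
        * PROCUREMENT[procurement]
    )


if __name__ == "__main__":
    hours = acquisition_effort_hours(25.0, "new", "high", "federal", "multi-step")
    print(f"{hours:,.0f} hours")
```

In practice `a`, `b`, and the multiplier tables would be fitted to your own history of completed acquisitions.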

Level 4 was awarded a contract to provide information technology governance support to the GSA's new OASIS contract. OASIS is expected to be the largest information technology services contract of its type ever managed.

All times are Pacific Time!

Free WebEx

Estimating with ExcelerPlan

7/10, 11 AM-12:30 PDT

7/17, 11 AM-12:30 PDT

7/31, 11 AM-12:30 PDT

8/7, 11 AM-12:30 PDT

8/14, 11 AM-12:30 PDT

8/21, 11 AM-12:30 PDT

8/28, 11 AM-12:30 PDT

To register for demos, email:

Edward@portal.level4ventures.com

Free Self-Paced Estimation Training

Receive 24 Professional Development Units (PDUs) of training credit by taking our free online cost estimation training. (The certificate requires verification of attendance plus passing the final exam.)

To register for training, email:

Edward@portal.level4ventures.com

What kind of Internal Rate of Return (IRR) are you looking for in your IT projects? 10%? 20%? 25%? How does 200% sound? That's the typical hard-dollar return you'll achieve on an estimation process improvement initiative, and it doesn't even include the secondary benefits you'll realize from improved project governance.

Most organizations currently use an activity- or role-based estimation approach, in which each work group that may participate in a project estimates its own effort component, and the results are then collected and summed. This requires many of your top-performing staff to attend enough meetings and read enough documents to understand and estimate the work required, at a typical cost of 2% to 3% of the project budget. The next time you're in one of those meetings, add up the cost of the meeting in labor alone.

Tool-based estimation processes are much more efficient, consistently delivering better accuracy with a 50% reduction in estimation effort. This is largely because far fewer people need to prepare the estimate, which means smaller meetings and fewer people reading documents. Halving an estimation cost of 2% to 3% of the project budget frees up roughly 1% to 1.5% of it, so if a tool-based estimation process improvement initiative saves your organization 1% of your total IT budget, it's not hard to see how a 200% Internal Rate of Return is not only possible, but typical.
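
The arithmetic behind that claim can be checked with a small IRR calculation. This is a sketch under stated assumptions: the $20M budget, the $100K one-time initiative cost, the three-year horizon, and applying the 2% estimation share to the whole budget are all hypothetical inputs chosen for illustration; IRR is found by plain bisection on net present value.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs now (undiscounted), then one per year."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=10.0, tol=1e-9):
    """Internal rate of return by bisection on [lo, hi].

    Assumes a conventional pattern (one outflow, then inflows), so NPV crosses
    zero exactly once inside the bracket.
    """
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("NPV does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid               # root lies in [lo, mid]
        else:
            lo, f_lo = mid, f_mid  # root lies in [mid, hi]
    return (lo + hi) / 2.0


# Hypothetical inputs, for illustration only.
IT_BUDGET = 20_000_000    # annual IT budget, $ (assumed to be spent on estimated projects)
ESTIMATION_SHARE = 0.02   # low end of the 2%-3% estimation cost cited above
EFFORT_REDUCTION = 0.50   # tool-based estimation halves estimation effort
INVESTMENT = 100_000      # assumed one-time cost of the improvement initiative, $
YEARS = 3                 # assumed benefit horizon

annual_savings = IT_BUDGET * ESTIMATION_SHARE * EFFORT_REDUCTION  # 1% of the budget
cash_flows = [-INVESTMENT] + [annual_savings] * YEARS

if __name__ == "__main__":
    print(f"Annual savings: ${annual_savings:,.0f}")
    print(f"IRR: {irr(cash_flows):.0%}")
```

With these assumed inputs the IRR comes out a little under 200%; a cheaper initiative or a longer benefit horizon pushes it higher.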

Edward

Director of Sales and Marketing

Order ExcelerPlan during July or August and receive one free month of maintenance and support (13 months versus 12). That's a $2K savings.

Remove the Kid Gloves: I've noticed that when it comes to critical management decision making, information technology typically "gets a pass" in ways that would be unheard of with other departments. I see this with measures of productivity relative to industry norms and over time. I see this with quality metrics. I see this with cost data, especially cost to the component (business requirement) level. Information Technology has claimed that these problems are unsolvable in their domain, and they've gotten away with it. But I can assure you that they are not unsolvable, and you're missing a huge competitive edge if you accept life without this information.

To measure productivity, you need to capture size and effort. Size represents the work that was delivered, and there are several standard sizing measures available. The most popular is function points or function point equivalents, but pretty much any measuring approach with the word "points" in it will work if consistently applied. Quality can be measured by capturing size and defects. Your test team's defect tracking system will already have a count of the defects, so size is the only additional variable you need. Labor cost can be allocated to individual business requirements with simple ratios: divide the size attributable to each business requirement by the entire project size, and apply that share to the project's labor cost.
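
Each of these measures is a simple ratio, as the minimal sketch below shows. It assumes size is recorded in function points (or any consistently applied "points" measure) per business requirement; the `Requirement` type, the function names, and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    name: str
    size_points: float  # function points or another consistently applied "points" measure


def productivity(size_points, effort_hours):
    """Delivered size per hour of effort (higher is better)."""
    return size_points / effort_hours


def defect_density(defects, size_points):
    """Defects per point of delivered size (lower is better)."""
    return defects / size_points


def allocate_labor_cost(requirements, total_labor_cost):
    """Split project labor cost across requirements in proportion to their size."""
    total_size = sum(r.size_points for r in requirements)
    if total_size <= 0:
        raise ValueError("total project size must be positive")
    return {r.name: total_labor_cost * r.size_points / total_size for r in requirements}


if __name__ == "__main__":
    # Hypothetical project data.
    reqs = [
        Requirement("Online enrollment", 60),
        Requirement("Eligibility rules", 30),
        Requirement("Reporting", 10),
    ]
    project_size = sum(r.size_points for r in reqs)
    print("Points per hour:", productivity(project_size, 800))
    print("Defects per point:", defect_density(25, project_size))
    print("Cost by requirement:", allocate_labor_cost(reqs, 500_000))
```

Some organizations prefer the inverse of productivity (hours per point); either works as long as it is tracked consistently over time.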

william@portal.level4ventures.com

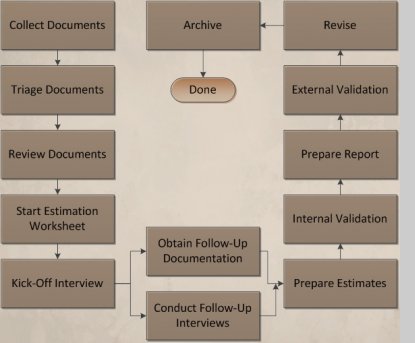

Estimation Process (part 1): If you're relatively new to the world of cost estimation, it can be a little intimidating trying to figure out where to begin. The conversation can quickly degenerate into a discussion of models, SLOC or function points, power curves, and other elements that don't solve your immediate problem of, "What now?" So let's walk through the life of an estimate from the perspective of a cost estimator.

You'll begin by collecting all of the documentation you can find about the project or work to be performed. This can take quite a bit of effort, because the documents may be scattered around, especially at the early stage of a project. You should then do a quick triage of the documents. Documents that deal with scope, requirements, inclusions, exclusions, etc. are good. If a document has a list of things (interfaces, reports, etc.), hang onto it. Documents that are out of date, that duplicate material in other documents, or that are too generic to be useful can be set aside. Some may be borderline. You'll then carefully read the good (and possibly borderline) documents, highlighting portions that pertain to the estimate. Finally, extract the elements of the documents that are relevant to the estimate into an estimation worksheet. You're then ready to conduct the estimation interview, which I'll discuss in more depth next issue.

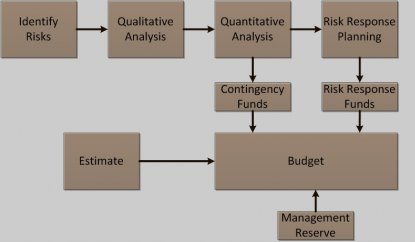

Budgeting for Project Risk: Most organizations consider themselves fortunate when the estimate is complete and they're able to use it to create a project budget. But by stopping with the estimate, they're inserting a statistical bias into the budgeting process that will, on average, result in project overruns. If we look at the risk management process (whether PMBOK or ISO 31000 based), we find that risk management involves risk identification, qualitative analysis, quantitative analysis, and risk response planning. Risk response planning involves proactively taking action that will reduce either the probability or the impact associated with a given risk (or increase them, for opportunities). Many of those proactive actions will have a definite cost associated with them, and that cost will definitely be incurred. For example, suppose that we were concerned about the liability associated with a data breach during the project. One response might be to take out an insurance policy that covers that possibility. The cost of that insurance policy is a project expense, and if it is not included in the budget, the project will be short of money. Risk response funds should be part of the project baseline budget.

There will be other areas where we identify contingency plans as a mechanism for dealing with risk. Requirements related to those contingency plans may have a cost, but it is a probabilistic cost. Most IT projects budget for contingencies by adding a percentage to the overall project budget, typically between 5% and 20%. These funds are normally included in the project but not initially allocated, and their release is normally under the control of the project manager.

Finally, we know that across a portfolio of projects, some projects will encounter totally unexpected problems or opportunities, and additional funds will need to be provided to these particular projects. It is a good idea to establish a management reserve across your portfolio of projects specifically for this purpose. This management reserve is normally under the control of your information technology governance committee, and it will typically range between 2.5% and 5% of the portfolio value. The funds may be budgeted at the portfolio/department level or at the project level.
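
The three layers described above (risk response costs in the baseline, project contingency, and a portfolio management reserve) can be laid out as a small budget model. This is a minimal sketch; applying the contingency percentage to the baseline (estimate plus risk responses), treating portfolio value as the sum of project totals, and the names `ProjectBudget` and `management_reserve` are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectBudget:
    estimate: float                                     # the estimate itself, $
    risk_responses: list = field(default_factory=list)  # definite costs of planned responses, $
    contingency_rate: float = 0.10                      # typically 5%-20%; released by the PM

    @property
    def baseline(self):
        """Estimate plus risk response costs that will definitely be incurred."""
        return self.estimate + sum(self.risk_responses)

    @property
    def contingency(self):
        """Included in the project but not initially allocated (assumed % of baseline)."""
        return self.baseline * self.contingency_rate

    @property
    def total(self):
        return self.baseline + self.contingency


def management_reserve(projects, reserve_rate=0.03):
    """Portfolio reserve (typically 2.5%-5%), held by the IT governance committee.

    Portfolio value is assumed here to be the sum of project totals.
    """
    return sum(p.total for p in projects) * reserve_rate


if __name__ == "__main__":
    # Hypothetical portfolio: one project buys data-breach insurance as a risk response.
    portfolio = [
        ProjectBudget(1_000_000, risk_responses=[25_000], contingency_rate=0.10),
        ProjectBudget(400_000, contingency_rate=0.05),
    ]
    for p in portfolio:
        print(f"baseline {p.baseline:,.0f}  contingency {p.contingency:,.0f}  total {p.total:,.0f}")
    print(f"management reserve {management_reserve(portfolio):,.0f}")
```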

Dear Tabby:

I’ve been asked by my significant other to estimate how many programmers it takes to change a light bulb. Do you have any advice?

signed, Electrified in El Cajon

Dear Electrified:

The answer is none…because that's a hardware problem. 🙂 But if you'd like to estimate the engineering hours to stand up some hardware, it's best to work in terms of the number and complexity of environments rather than at the level of individual servers. Empirical evidence demonstrates that this is more accurate, and as we cats say, trying to work at the level of each individual box will just have you chasing your tail.

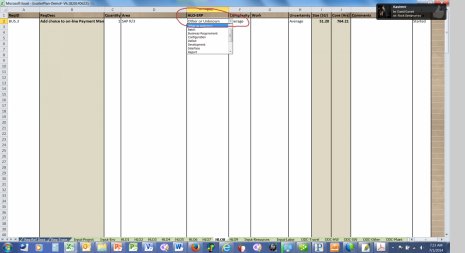

Dealing with Unknowns: You may notice that most of the ExcelerPlan estimation input drop-downs include a choice for Unknown or Other. Believe it or not, this seemingly simple option represents a fundamental ExcelerPlan design philosophy and feature. In the world of estimation, we are dealing with unknowns all of the time. At the beginning of a project there will typically be more things we don't know than things we do know. Our third-generation estimation tools (and all competitor tools) required the estimator to make an educated guess in this situation and enter data based on that guess. This worked from a tool calculation perspective, but the fact that the answer was actually unknown was lost in the process, leading to a false sense of precision in the results. By accepting Unknown as a valid input, we correctly capture what is and is not known about a project. Behind the scenes, unknown values are treated as the average of the values within the list, but with a high degree of uncertainty that widens the plus-or-minus range of the overall estimate.
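
The idea can be illustrated with a small sketch. It is not ExcelerPlan's actual calculation, which isn't described here beyond "average of the list plus high uncertainty". The drop-down tables and their multiplier values are hypothetical; using the population standard deviation of the list as the uncertainty for Unknown, and combining relative uncertainties in quadrature, are assumptions chosen to show how an Unknown answer widens the range without shifting the point estimate much.

```python
import math
from statistics import mean, pstdev

# Hypothetical drop-downs: option -> effort multiplier (illustrative values only).
TEAM_EXPERIENCE = {"low": 1.3, "average": 1.0, "high": 0.8}
REQUIREMENTS_VOLATILITY = {"low": 0.9, "medium": 1.0, "high": 1.2}


def resolve(options, choice):
    """Return (multiplier, standard deviation) for one drop-down selection.

    A known choice adds no spread; "unknown" uses the mean of the list, with the
    list's own spread as its uncertainty (an assumed rule).
    """
    if choice == "unknown":
        values = list(options.values())
        return mean(values), pstdev(values)
    return options[choice], 0.0


def estimate_with_range(base_hours, selections):
    """Multiply the selections; add relative uncertainties in quadrature (first-order)."""
    multiplier, rel_var = 1.0, 0.0
    for m, sd in selections:
        multiplier *= m
        rel_var += (sd / m) ** 2
    point = base_hours * multiplier
    return point, point * math.sqrt(rel_var)


if __name__ == "__main__":
    known = [resolve(TEAM_EXPERIENCE, "high"), resolve(REQUIREMENTS_VOLATILITY, "medium")]
    partly_unknown = [resolve(TEAM_EXPERIENCE, "unknown"), resolve(REQUIREMENTS_VOLATILITY, "medium")]
    for label, selections in (("known", known), ("unknown experience", partly_unknown)):
        point, spread = estimate_with_range(10_000, selections)
        print(f"{label}: {point:,.0f} +/- {spread:,.0f} hours")
```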